Understanding Vocal AI: What It Is and How It Works

Are there any vocal AI systems that understand vocal tones? The question interests music enthusiasts and tech-savvy readers alike. In this article, we look at what vocal AI is, what it can and cannot do, and how well it can detect and interpret vocal tone.

Vocal AI, also known as voice AI, refers to artificial intelligence systems designed to analyze, process, and generate human speech. These systems have become increasingly sophisticated, enabling them to perform a wide range of tasks, from speech recognition to voice synthesis.

How Vocal AI Understands Vocal Tones

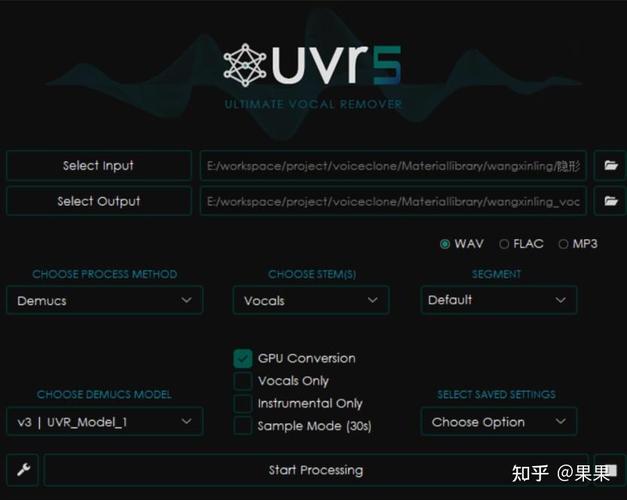

To answer whether any vocal AI systems understand vocal tones, we first need to look at how these systems work. Most modern vocal AI relies on machine learning, typically deep neural networks, to analyze and interpret audio signals.

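One long-standing building block of vocal AI is the mel-frequency cepstral coefficient (MFCC). MFCCs are a compact numerical summary of the short-term spectral envelope of an audio signal, computed on the mel scale, which approximates how humans perceive pitch. By feeding MFCCs (or related features such as mel spectrograms) into a trained model, vocal AI can distinguish speech sounds such as vowels and consonants and, with suitable training data, even emotional expressions. Because MFCCs mainly describe timbre rather than pitch, systems that analyze intonation usually add a separate pitch measurement.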
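To make the MFCC pipeline concrete, here is a minimal pure-Python sketch that computes MFCCs for a single frame: Hamming window, power spectrum, triangular mel filterbank, logarithm, and a discrete cosine transform (DCT-II). The defaults (26 filters, 13 coefficients, the HTK-style mel formula) are common choices assumed for illustration, and a naive DFT stands in for the FFT that real toolkits use. Production code also applies pre-emphasis and processes the whole signal frame by frame.

```python
import math

def hz_to_mel(f):
    """HTK-style mel scale (one commonly used variant, assumed here)."""
    return 2595.0 * math.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def power_spectrum(frame):
    """Power spectrum for bins 0..N/2 via a naive O(N^2) DFT (fine for one short frame)."""
    n = len(frame)
    spectrum = []
    for k in range(n // 2 + 1):
        re = sum(x * math.cos(2 * math.pi * k * t / n) for t, x in enumerate(frame))
        im = -sum(x * math.sin(2 * math.pi * k * t / n) for t, x in enumerate(frame))
        spectrum.append((re * re + im * im) / n)
    return spectrum

def mel_filterbank(num_filters, n_fft, sample_rate):
    """Triangular filters whose edge points are evenly spaced on the mel scale."""
    low, high = hz_to_mel(0.0), hz_to_mel(sample_rate / 2.0)
    step = (high - low) / (num_filters + 1)
    # Map each mel edge point down to a DFT bin index
    bins = [int((n_fft + 1) * mel_to_hz(low + i * step) / sample_rate)
            for i in range(num_filters + 2)]
    filters = []
    for j in range(1, num_filters + 1):
        left, center, right = bins[j - 1], bins[j], bins[j + 1]
        weights = [0.0] * (n_fft // 2 + 1)
        for k in range(left, center):      # rising edge of the triangle
            weights[k] = (k - left) / (center - left)
        for k in range(center, right):     # falling edge of the triangle
            weights[k] = (right - k) / (right - center)
        filters.append(weights)
    return filters

def dct_ii(values, num_coeffs):
    """Unnormalized DCT-II, keeping only the first num_coeffs coefficients."""
    n = len(values)
    return [sum(v * math.cos(math.pi * k * (i + 0.5) / n) for i, v in enumerate(values))
            for k in range(num_coeffs)]

def mfcc_frame(frame, sample_rate, num_filters=26, num_coeffs=13):
    """MFCCs for one frame of audio samples."""
    n = len(frame)
    # Hamming window tapers the frame edges to reduce spectral leakage
    windowed = [x * (0.54 - 0.46 * math.cos(2 * math.pi * t / (n - 1)))
                for t, x in enumerate(frame)]
    spectrum = power_spectrum(windowed)
    energies = [sum(w * p for w, p in zip(filt, spectrum))
                for filt in mel_filterbank(num_filters, n, sample_rate)]
    # Floor tiny energies so log() never sees zero
    log_energies = [math.log(max(e, 1e-10)) for e in energies]
    return dct_ii(log_energies, num_coeffs)
```

For example, calling `mfcc_frame(frame, 16000)` on a 512-sample frame (32 ms of audio at 16 kHz) returns 13 coefficients; a model would see one such vector per frame.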

Types of Vocal AI Systems

There are several types of vocal AI systems, each with its own approach to vocal tone. Here are some of the most common types:

- Speech Recognition Systems
- Text-to-Speech (TTS) Systems
- Music Generation Systems
- Emotion Recognition Systems

Speech Recognition Systems

Speech recognition systems convert spoken words into written text. To work reliably, they must handle a wide variety of voices, accents, and tones of voice, which makes them useful for applications such as transcription services and voice assistants.

One popular speech recognition system is Google’s Speech-to-Text API, which uses deep neural networks to analyze audio signals and transcribe them into text. It does not cope equally well with every voice, accent, or speaking style, but it has made considerable progress in this area.
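Google's Speech-to-Text API can also be called over REST. The sketch below only builds the JSON body for a synchronous `speech:recognize` request (v1), assuming 16-bit mono PCM audio (`LINEAR16`) at 16 kHz and US English. The helper name `build_recognize_request` is hypothetical, and actually sending the body to `https://speech.googleapis.com/v1/speech:recognize` requires Google Cloud credentials, which are out of scope here.

```python
import base64
import json

def build_recognize_request(pcm_bytes, sample_rate_hz=16000, language_code="en-US"):
    """Hypothetical helper: JSON body for a v1 speech:recognize call.

    Assumes raw 16-bit little-endian mono PCM (LINEAR16); other formats
    need a different "encoding" value.
    """
    body = {
        "config": {
            "encoding": "LINEAR16",
            "sampleRateHertz": sample_rate_hz,
            "languageCode": language_code,
        },
        "audio": {
            # Inline audio content must be base64-encoded
            "content": base64.b64encode(pcm_bytes).decode("ascii"),
        },
    }
    return json.dumps(body)
```

If the call succeeds, the response contains `results`, each with ranked `alternatives` that carry a `transcript` and a `confidence` score.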

Text-to-Speech (TTS) Systems

Text-to-speech systems generate spoken audio from written text. The range of tones they can produce depends on the sophistication of the AI model and on the input itself, including punctuation and markup such as Speech Synthesis Markup Language (SSML), which can adjust rate, pitch, and volume.

One notable TTS system is Amazon Polly, which uses deep learning to generate realistic-sounding speech. Polly does not understand vocal tone in any deep sense, but it can vary its delivery through SSML controls such as speaking rate, pitch, and volume, making it a valuable tool for applications such as audiobooks and voiceovers.
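Amazon Polly accepts SSML input, in which the `<prosody>` tag adjusts speaking rate, volume, and pitch. The sketch below builds such a document and the keyword arguments for `synthesize_speech` in boto3, the AWS SDK for Python. The helper names, the `Joanna` voice, and MP3 output are illustrative choices, and support for individual prosody attributes can differ between Polly's voice engines, so check the Polly SSML documentation before relying on `pitch`. The API call itself is left as a comment because it needs AWS credentials.

```python
from xml.sax.saxutils import escape

def build_ssml(text, rate="medium", volume="medium", pitch=None):
    """Hypothetical helper: wrap text in <speak>/<prosody> to shape delivery."""
    attrs = [f'rate="{rate}"', f'volume="{volume}"']
    if pitch is not None:
        attrs.append(f'pitch="{pitch}"')  # relative values such as "+10%" or "-5%"
    # escape() turns &, <, > into entities so the text cannot break the markup
    return f"<speak><prosody {' '.join(attrs)}>{escape(text)}</prosody></speak>"

def polly_request(ssml, voice_id="Joanna", output_format="mp3"):
    """Hypothetical helper: keyword arguments for boto3's Polly synthesize_speech()."""
    return {"Text": ssml, "TextType": "ssml", "VoiceId": voice_id, "OutputFormat": output_format}

ssml = build_ssml("Chapter one. It was a dark & stormy night.", rate="slow", pitch="-5%")
params = polly_request(ssml)

# Sending the request needs boto3 and configured AWS credentials:
#   import boto3
#   response = boto3.client("polly").synthesize_speech(**params)
#   audio_bytes = response["AudioStream"].read()
```

Keeping the markup builder separate from the API call makes the tone settings easy to test without touching the network.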

Music Generation Systems

Music generation systems create music from a set of input parameters, which in some systems include vocal characteristics. Those that support vocals can produce a wide range of vocal tones, which makes them useful for composers and musicians.

One popular music generation system is AIVA (Artificial Intelligence Virtual Artist), which uses deep learning to compose music in many styles, giving composers a quick way to experiment with new sounds.

Emotion Recognition Systems

Emotion recognition systems identify and interpret emotional expression in speech. They track subtle changes in vocal cues such as pitch, intensity, and rhythm to estimate the speaker's emotional state.
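Two of these cues, pitch and intensity, can be measured with very little code. The sketch below estimates intensity as root-mean-square (RMS) amplitude and pitch (fundamental frequency) as the strongest autocorrelation peak within an assumed 75 to 400 Hz speaking range. The function names and frequency range are illustrative assumptions, rhythm is not covered, and real systems use more robust pitch trackers (YIN is one well-known example) before passing such features to a trained classifier.

```python
import math

def rms_intensity(frame):
    """Root-mean-square amplitude, a simple proxy for loudness."""
    return math.sqrt(sum(x * x for x in frame) / len(frame))

def estimate_pitch(frame, sample_rate, fmin=75.0, fmax=400.0):
    """Estimate fundamental frequency in Hz from the autocorrelation peak.

    Searches only lags corresponding to fmin..fmax (an assumed speech range).
    Resolution is limited to whole-sample lags. Returns None for silence.
    """
    if rms_intensity(frame) < 1e-6:
        return None
    min_lag = int(sample_rate / fmax)
    max_lag = min(int(sample_rate / fmin), len(frame) - 1)
    best_lag, best_corr = None, 0.0
    for lag in range(min_lag, max_lag + 1):
        # Unnormalized sum: longer lags have fewer terms, which favors the
        # first period over its multiples and helps avoid octave errors
        corr = sum(frame[t] * frame[t + lag] for t in range(len(frame) - lag))
        if corr > best_corr:
            best_lag, best_corr = lag, corr
    return sample_rate / best_lag if best_lag else None
```

On a synthetic 200 Hz sine wave sampled at 16 kHz, the estimate lands within a few hertz of 200, because the lag is rounded to whole samples. Tracking how these values rise and fall across successive frames is what gives a classifier its view of intonation and emphasis.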

One notable emotion recognition system is Affectiva, which combines machine learning and computer vision to analyze facial expressions alongside vocal cues. Like the other systems covered here, it does not interpret every vocal tone correctly, but it has made considerable progress.

Conclusion

In short, vocal AI systems that can understand and interpret vocal tones do exist. They are far from perfect, but they have made significant progress and continue to evolve. As the technology advances, we can expect more sophisticated systems that capture more of the nuance in human speech and emotion.